In an attempt to standardize, future-proof, and keep up with technology trends, organizations are challenged to define standards for how solutions are architected and implemented. This is reasonable. I’ve often been tasked with leading teams to create these standards, and as an architect, I find myself working within these boundaries while also drawing new ones.

Of course, what worked 10 or 15 years ago (or even 3 years ago) may not be the best way forward for the organization, so new standards get created, and the expectation is often that all new solutions and applications follow them. Teams are formed to define standards and best practices, which are eventually agreed upon and broadcast to the rest of the organization. In solution and application architecture, for example, standards can define how systems interact with each other (REST APIs, microservices, etc.), how software is built (design patterns, frameworks, ORMs, languages, platforms, etc.), how integration architecture is applied (batch, queues, APIs), and so forth.

Problems arise when standards become so rigid that they force solutions into target states that are not ideal given the existing landscape. What is ideal and what is not depends on many factors, including cost, complexity, timing, maintenance, technical debt, and technical expertise. Although I advocate challenging standards when necessary, it is important to realize that target architecture and design standards need to provide room, and a mechanism, for deviations without a lot of bureaucracy. At the same time, they can’t be so lax that people are tempted to just do it the way they’ve always done it.

Let’s consider an organization that wants to enforce a target state architecture in which all integration between systems is done through an API service. The organization has procured all of the technology needed to do this, including an API Management layer, an API Gateway, and cloud infrastructure to orchestrate API calls between systems on-premises, in its private cloud, and in SaaS cloud solutions.

Now let’s look at a new SaaS solution being brought into the enterprise, for which an architect is responsible for creating the Solution and Integration Architecture. The architect does his or her due diligence, working with the vendor to understand the possible ways to push and pull data. Although this particular solution doesn’t support direct REST API integration, the architect pushes the SaaS vendor to build this capability into the solution. It turns out the capability is on the vendor’s roadmap, but with no definite timeframe, so we can’t expect a REST API to be available anytime soon.

Without the REST API capability, the organization, which requires critical integration of master, reference, and transactional data to and from the SaaS application, is limited to scheduled SFTP transfers of flat files. Ordinarily, this type of integration would be pretty straightforward, but unfortunately it would violate the enterprise’s standards directives, which executives recently signed off on.

But given the hand we’ve been dealt, there are still ways to meet the organization’s target state and slide this through. That doesn’t mean it’s the right thing to do, and in this case it isn’t, but let’s look at what would be built if the mandated standards had to be strictly enforced.

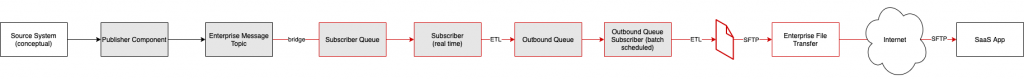

Let’s consider just a single integration flow for a minute. If we didn’t have to worry about the new defined target state architecture to adhere to, we’d end up with something like the following:

It’s pretty straightforward. Multiple sources can publish messages onto a common outbound queue, where the logic to send data to the SaaS application is encapsulated and reusable. We can reuse existing publishers in a publish/subscribe messaging implementation. We simply create a new subscriber that subscribes to the real-time data and pushes it to a new subscriber queue. A scheduled process (daily, or even near real time if necessary) picks up the messages, creates the XML, and applies some transformation before pushing the file to the Enterprise File Transfer platform, which takes care of the rest and securely delivers the data to the SaaS solution.
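
To make the scheduled step concrete, here is a minimal Python sketch of one batch run, under loud assumptions: an in-process `queue.Queue` stands in for the real subscriber queue on the message broker, the field mapping in `transform` and the `<Records>/<Record>` XML layout are hypothetical (the vendor’s file specification would dictate the real ones), and handing off to the Enterprise File Transfer platform is modeled as dropping a file into an outbound directory that the platform is assumed to watch. All function names are illustrative, not part of any product.

```python
from __future__ import annotations

import queue
import tempfile
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from pathlib import Path


def drain_queue(subscriber_queue: queue.Queue) -> list[dict]:
    """Pull every message currently waiting on the (stand-in) subscriber queue."""
    messages = []
    while True:
        try:
            messages.append(subscriber_queue.get_nowait())
        except queue.Empty:
            return messages


def transform(message: dict) -> dict:
    """Hypothetical mapping from source-system fields to the SaaS file layout."""
    return {"Id": str(message["id"]), "Name": message["name"].strip().upper()}


def build_xml(records: list[dict]) -> bytes:
    """Serialize the transformed records into a single XML document."""
    root = ET.Element("Records")
    for record in records:
        item = ET.SubElement(root, "Record")
        for name, value in record.items():
            ET.SubElement(item, name).text = value
    return ET.tostring(root, encoding="utf-8", xml_declaration=True)


def run_batch(subscriber_queue: queue.Queue, outbound_dir: Path) -> Path | None:
    """One scheduled run: drain, transform, and write a file for the transfer platform."""
    messages = drain_queue(subscriber_queue)
    if not messages:
        return None  # nothing to send this cycle
    payload = build_xml([transform(m) for m in messages])
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    target = outbound_dir / f"saas_export_{stamp}.xml"
    # Write under a temporary name, then rename, so the (assumed) directory
    # watcher never picks up a half-written file.
    partial = target.with_suffix(".tmp")
    partial.write_bytes(payload)
    partial.replace(target)
    return target


if __name__ == "__main__":
    q: queue.Queue = queue.Queue()
    q.put({"id": 1, "name": " acme "})
    q.put({"id": 2, "name": "globex"})
    with tempfile.TemporaryDirectory() as tmp:
        written = run_batch(q, Path(tmp))
        print(written.read_text(encoding="utf-8"))
```

Everything past the outbound directory (SFTP, encryption, retries, delivery receipts) stays with the file transfer platform, which is what keeps this version small.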

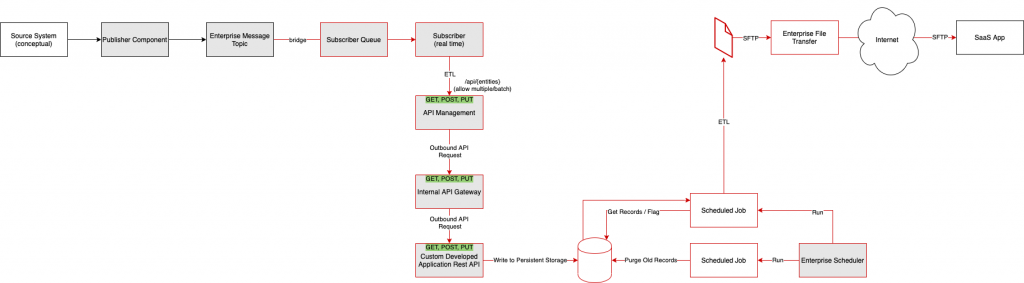

But, we have that mandatory target state using service based architecture to worry about, so, let’s look at how we could actually be “successful” in meeting the organizations signed off target state architecture. Take a look at the following:

As you can see, we’ve met the “target state architecture” by wrapping all of the inner workings behind a REST API Management layer, which has inner workings of its own. You could call that being “successful,” or something that is “fun” to architect, but it’s wrong and it should never see the light of day.

Let’s look at the complexity this “target state” compliant version of the architecture adds:

- The solution still needs to consume the same data from the source system, and it can only get that data in real time from the existing messaging interface, so we are still tied to the source system’s existing messaging infrastructure

- Many additional points of failure

- Performance concerns in the API Management layer

- Outbound API needs to write the data out in real time to storage (queue, persisted table, etc) only to be consumed later by a batch process that pushes the data to the SaaS solution

- Writing to the API doesn’t tell us the status of the data, because the rest of the processing happens asynchronously, so we need to implement another API endpoint to report that status and track it internally as well

- Need to support PUT, POST, and GET operations on the API, with PUT and POST accepting both single entities and bulk lists of entities
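
For comparison, here is a framework-free Python sketch of the extra machinery the API-compliant version forces us to own. It assumes an in-memory dictionary in place of the persisted table or queue and plain method calls in place of real HTTP handlers; `OutboundApiFacade`, `StagedRecord`, and the `PENDING`/`SENT`/`FAILED` statuses are hypothetical, and the choice of status codes is illustrative. It shows POST accepting single and bulk entities, data being staged only to wait for a batch, and the separate status lookup callers now need. PUT handlers would add yet more of the same.

```python
from __future__ import annotations

import uuid
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StagedRecord:
    payload: dict
    status: str = "PENDING"  # PENDING -> SENT or FAILED once the batch runs


@dataclass
class OutboundApiFacade:
    """Hypothetical in-memory stand-in for the API layer plus its staging store."""

    staging: dict[str, StagedRecord] = field(default_factory=dict)

    def post(self, body: dict | list[dict]) -> tuple[int, dict]:
        """POST accepts one entity or a bulk list; nothing is delivered yet."""
        entities = body if isinstance(body, list) else [body]
        if not entities or not all(isinstance(e, dict) for e in entities):
            return 400, {"error": "expected an entity or a non-empty list of entities"}
        ids = []
        for entity in entities:
            tracking_id = str(uuid.uuid4())
            self.staging[tracking_id] = StagedRecord(payload=entity)
            ids.append(tracking_id)
        # 202 Accepted: the caller only learns the data was staged, not sent.
        return 202, {"trackingIds": ids}

    def get_status(self, tracking_id: str) -> tuple[int, dict]:
        """The extra GET endpoint callers need to find out what happened later."""
        record = self.staging.get(tracking_id)
        if record is None:
            return 404, {"error": "unknown tracking id"}
        return 200, {"trackingId": tracking_id, "status": record.status}

    def run_batch(self, send_file: Callable[[list[dict]], None]) -> int:
        """Scheduled job: collect PENDING rows and hand them to the file transfer step."""
        pending = [r for r in self.staging.values() if r.status == "PENDING"]
        if not pending:
            return 0
        try:
            send_file([r.payload for r in pending])
            new_status = "SENT"
        except Exception:  # sketch only: any transfer error marks the batch FAILED
            new_status = "FAILED"
        for record in pending:
            record.status = new_status
        return len(pending)
```

Note that `run_batch` still ends by handing records to the same file transfer step as the simpler design; the API layer adds staging, tracking, and status reporting in front of it without removing anything.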

Now, there is a potential benefit: if the SaaS vendor eventually builds its API, you could adapt your current API Management layer to send outbound API requests to it. Keep in mind, though, that this won’t be 100 percent straightforward, and you do not yet know the complexity of the vendor’s REST API. I’m a huge proponent of the YAGNI (you ain’t gonna need it!) and LRM (last responsible moment) principles. Don’t build this now, and don’t over-architect it now. Build it when and if you need it, because likely, you ain’t gonna need it.

It’s important that we not be so rigid that every solution must be built to an ideal target state or set of ideals. Being pragmatic ensures we are thinking about the right approach for the job and that architectural decisions are made on a sound basis, taking into account cost, complexity, maintenance, technical debt, speed to market, and what is most practical. These points matter because an architect should not create unnecessary complexity by over-architecting solutions, even when doing so is required to meet some “mandatory” target state. That doesn’t mean doing what’s easy and only what’s easy. It means finding a balance between cost, complexity, maintenance, and target state. If you find yourself architecting or implementing a solution where one of these items is out of balance, you need to rethink it.

Generally, an organization should not have rigid, mandatory target states, and it’s important for the teams setting the standards, the executives approving them, and those implementing solutions based on them to all understand that everything is negotiable. Architecture is negotiable, and it always should be. As an architect, you should be prepared to sell your solution even if it does not meet “mandatory” target states. Your solution should not be based only on meeting the target state, but on the pragmatic points that the organization and your business stakeholders care most about, including cost (short and long term), business value, and complexity.